In today’s post, I’ll take an in-depth look at Random Forests, one of the most popular and effective algorithms in the data science toolkit. I’ll describe what I learned about how they work, their components and what makes them tick.

What Are Random Forests?

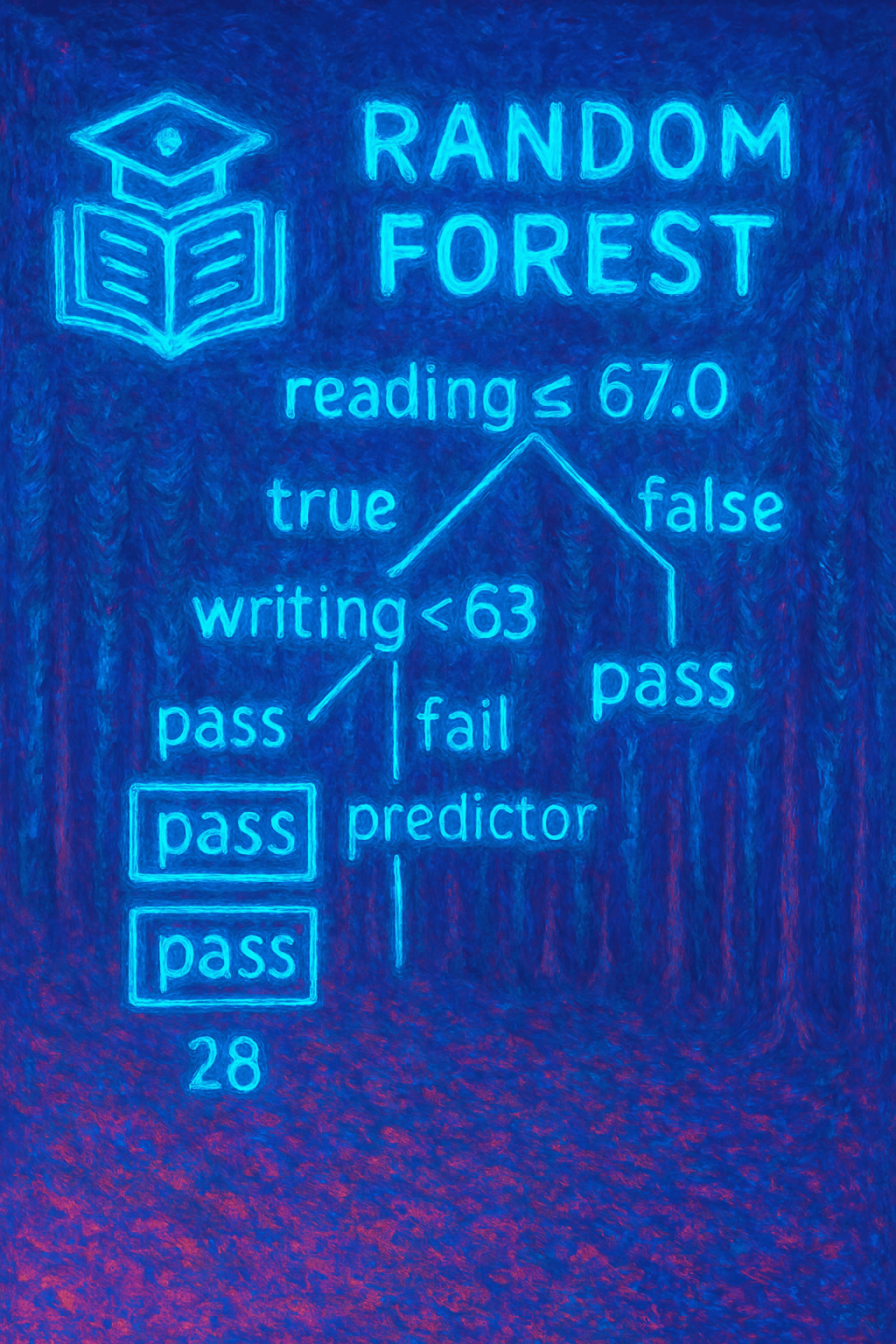

At its heart, a random forest is an ensemble of decision trees working together.

- Decision Trees: Each tree is a model that makes decisions by splitting the data on certain features.

- Ensemble Approach: Instead of relying on a single decision tree, a random forest builds many trees from bootstrapped samples of your data. The prediction from the forest is then derived by averaging (for regression) or taking a majority vote (for classification).

This approach reduces the high variance typical of individual trees and produces a more robust model that can still capture complex feature interactions.
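To make “splitting data based on certain features” concrete, here’s a minimal sketch of how a classification tree could choose one split. It assumes Gini impurity as the split criterion (a common choice, but an assumption here), and `gini` and `best_split` are hypothetical helpers, not any library’s API. A full tree simply repeats this search recursively on each side of the chosen split.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(rows, labels, feature):
    """Try every threshold on one feature; return (threshold, impurity decrease).

    Returns (None, 0.0) if no threshold lowers the impurity.
    """
    parent = gini(labels)
    n = len(labels)
    best = (None, 0.0)
    for threshold in sorted({r[feature] for r in rows}):
        left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
        right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
        if not left or not right:
            continue  # a split must send rows to both sides
        # Size-weighted impurity of the two children.
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        decrease = parent - child
        if decrease > best[1]:
            best = (threshold, decrease)
    return best


rows = [[1.0], [2.0], [3.0], [4.0]]
labels = ["a", "a", "b", "b"]
print(best_split(rows, labels, feature=0))  # (2.0, 0.5)
```

Splitting at 2.0 separates the classes perfectly, so the impurity drops from 0.5 to 0.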

The Magic Behind the Method

1. Bootstrap Sampling

Each tree in the forest is trained on a different sample of the data, drawn with replacement. This process, known as bagging (Bootstrap Aggregating), means that roughly 37% of the rows are left out of any given tree’s sample. These left-out rows, that tree’s out-of-bag (OOB) set, can later be used to validate the model internally without needing a separate validation set.
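Here’s a small standard-library sketch of one tree’s bootstrap draw; `bootstrap_indices` and `out_of_bag` are illustrative names, not a library API. It also shows where the 37% comes from: a single draw misses a given row with probability 1 - 1/n, so all n draws miss it with probability (1 - 1/n)^n, which approaches 1/e ≈ 0.368 as n grows.

```python
import math
import random


def bootstrap_indices(n, rng):
    """Draw n row indices with replacement: one tree's training sample."""
    return [rng.randrange(n) for _ in range(n)]


def out_of_bag(n, in_bag):
    """Rows that never appeared in this tree's bootstrap sample."""
    seen = set(in_bag)
    return [i for i in range(n) if i not in seen]


rng = random.Random(0)
n = 10_000
sample = bootstrap_indices(n, rng)
oob = out_of_bag(n, sample)

# Compare the observed OOB fraction with the theoretical value.
print(f"OOB fraction: {len(oob) / n:.3f}")
print(f"(1 - 1/n)^n:  {(1 - 1 / n) ** n:.3f}")
print(f"1/e:          {1 / math.e:.3f}")
```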

2. Random Feature Selection

At every split within a tree, instead of considering every feature, the algorithm considers only a randomly selected subset of features. This randomness:

- De-correlates Trees: The trees become less alike, which keeps the ensemble from leaning too heavily on one dominant feature and helps limit overfitting.

- Reduces Variance: Averaging predictions across diverse trees smooths out misclassifications or prediction errors.
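A minimal sketch of the per-split feature draw follows. It assumes the common rule of thumb of considering sqrt(p) of the p features for classification; the subset size is really a tunable choice (scikit-learn calls it `max_features`). `candidate_features` is a hypothetical helper. In a real tree, the split search would then run only over the returned feature indices, with a fresh draw at every split.

```python
import math
import random


def candidate_features(n_features, rng, max_features=None):
    """Pick the features a single split is allowed to consider."""
    if max_features is None:
        # sqrt(p) is a common default for classification (an assumption here).
        max_features = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), max_features))


rng = random.Random(42)
# With 16 features, each split sees only 4 of them, redrawn at every split.
for split in range(3):
    print(f"split {split}: features {candidate_features(16, rng)}")
```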

3. Aggregating Predictions

For classification tasks, each tree casts a vote for a class, and the class with the highest number of votes becomes the model’s prediction.

For regression tasks, the trees’ predictions are averaged to produce a final value. This collective approach generally results in higher accuracy and more stable predictions than a single tree.
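Both aggregation rules fit in a few lines. This sketch uses hard majority voting as described above; note that scikit-learn’s `RandomForestClassifier` actually averages the trees’ predicted class probabilities instead of counting hard votes. The tree outputs below are made-up values for a single input row.

```python
from collections import Counter
from statistics import mean


def vote(tree_predictions):
    """Classification: the most common class among the trees wins.

    Ties go to the class seen first (Counter keeps insertion order).
    """
    return Counter(tree_predictions).most_common(1)[0][0]


def average(tree_predictions):
    """Regression: the forest's prediction is the mean of the trees' outputs."""
    return mean(tree_predictions)


# Hypothetical outputs from five trees for one input row.
print(vote(["pass", "fail", "pass", "pass", "fail"]))  # pass
print(average([71.0, 68.5, 74.0, 70.5, 69.0]))        # 70.6
```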

Out-of-Bag (OOB) Error

An important feature of random forests is the OOB error estimate.

- What It Is: Each tree is trained on a bootstrap sample, leaving out a set of data that can serve as a mini-test set.

- Why It Counts: Predicting each row using only the trees that never saw it, then comparing those aggregated predictions with the true values, gives an estimate of the model’s test error.

This feature can be really handy, especially when you’re working with limited data and want to avoid setting aside a large chunk of it for validation.
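Here’s a minimal sketch of the OOB error for classification. It assumes you already have each tree’s in-bag row indices and each tree’s predictions for every row (in practice you’d only predict on the OOB rows). `oob_error` is a hypothetical helper; scikit-learn computes a related number for you when you pass `oob_score=True` and exposes it as `oob_score_`, which for classifiers is an accuracy by default, i.e. 1 minus this error. The tiny dataset is invented for illustration.

```python
from collections import Counter


def oob_error(labels, tree_in_bag, tree_predictions):
    """Misclassification rate using only out-of-bag votes.

    labels:           true class for each of the n rows
    tree_in_bag:      for each tree, the set of row indices it was trained on
    tree_predictions: for each tree, its predicted class for every row
    """
    wrong = scored = 0
    for i, y in enumerate(labels):
        # Only trees that never saw row i get a vote on it.
        votes = Counter(
            preds[i]
            for in_bag, preds in zip(tree_in_bag, tree_predictions)
            if i not in in_bag
        )
        if not votes:
            continue  # row i was in every tree's sample, so it can't be scored
        scored += 1
        if votes.most_common(1)[0][0] != y:
            wrong += 1
    return wrong / scored if scored else float("nan")


labels = ["a", "a", "b", "b"]
tree_in_bag = [{0, 1}, {2, 3}, {0, 2, 3}]
tree_predictions = [
    ["a", "a", "b", "a"],  # tree 0 is OOB for rows 2 and 3
    ["a", "a", "a", "b"],  # tree 1 is OOB for rows 0 and 1
    ["b", "a", "b", "b"],  # tree 2 is OOB for row 1
]
print(oob_error(labels, tree_in_bag, tree_predictions))  # 0.25
```

Only row 3 is misclassified by its out-of-bag vote, so the estimated error is 1 out of 4 rows.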

Feature Importance

Random forests don’t just predict; they can also help you understand your data:

- Mean Decrease in Impurity (MDI): This measure totals how much each feature decreases impurity (based on measures like the Gini index) at the splits where it is used, averaged across all trees.

- Permutation Importance: Shuffle one feature’s values and measure how much the model’s accuracy drops; the bigger the drop, the more the model relies on that feature. Both measures help when you need to interpret the model and communicate which features are most influential.
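A minimal, standard-library sketch of permutation importance follows. It assumes accuracy as the score and a model given as a plain function from a row to a label; with a real forest you would pass the fitted model’s prediction function and, ideally, held-out data. `permutation_importance` here is a hypothetical helper written for illustration (scikit-learn ships a fuller version as `sklearn.inspection.permutation_importance`). The toy model and data are made up: the model only looks at feature 0, so shuffling feature 1 should cost nothing.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for f in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)
            # Copy each row, replacing only feature f with its shuffled value.
            shuffled = [r[:f] + [v] + r[f + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def threshold_model(row):
    """Toy stand-in for a fitted model: uses feature 0 only."""
    return "pass" if row[0] >= 50 else "fail"


data_rng = random.Random(1)
rows = [[data_rng.uniform(0, 100), data_rng.uniform(0, 100)] for _ in range(200)]
labels = [threshold_model(r) for r in rows]
print(permutation_importance(threshold_model, rows, labels, n_features=2))
```

Feature 0 shows a large accuracy drop, while feature 1, which the model never reads, scores exactly 0.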

Pros and Cons

Advantages:

- Handles Non-Linear Data: Naturally captures complex feature interactions.

- Handles Noise & Outliers: Averaging many trees limits how much any one tree’s overfitting to noisy points affects the final prediction.

- Needs Little Preprocessing: Splits depend only on the ordering of feature values, so there’s no need for feature scaling or extensive transformation.

Disadvantages:

- Memory Intensive: Storing hundreds of trees can be demanding.

- Slower than a Single Tree: Compared to a single decision tree, the ensemble approach requires more processing power for both training and prediction.

- Harder to Interpret: Combining many trees makes the model harder to interpret than an individual tree.

Summary

Random Forests are a powerful next step in my journey. With their ability to reduce variance through ensemble learning and their built-in validation mechanisms like OOB error, they offer both performance and insight.

In my next post, I’ll share how I apply the Random Forest technique to this data set: https://www.kaggle.com/datasets/whenamancodes/students-performance-in-exams/data

– William
